State of symbolic shapes: Jul 15, 2023 edition

Previous update: State of symbolic shapes branch - #61 by ezyang

Executive summary

- Dynamic shapes now support mode="reduce-overhead" (CUDA graphs). Conventional wisdom was that dynamic shapes are incompatible with CUDA graphs, because any given CUDA graph recording can only work for a single static shape, and the CUDA graphs requirement of hard-coded memory addresses means that each CUDA graph takes up quite a lot of CUDA memory. However, this conventional wisdom is wrong: (1) multiple CUDA graphs can share the same memory pool, as long as you don’t have any live tensors from one pool to the next (this is precisely what CUDA graph trees by @eellison implements), and (2) recording a CUDA graph is much, much cheaper than running the entire PT2 compilation stack, so it is profitable to compile a dynamic program once and then CUDA graph it multiple times. https://github.com/pytorch/pytorch/pull/105064 realizes these gains and switches our dynamic shapes benchmark configuration to use CUDA graphs, resulting in hefty performance gains with only a modest increase in compile time. Importantly, these benchmarks cover our _generate inference benchmarks, which actually make use of multiple sizes as sequence length varies. There’s more to be done here: our memory usage for this use case can be suboptimal, because the caching allocator doesn’t know that it’s OK to waste space for small allocations by fitting them inside larger allocations for a larger dynamic size. We also observed that folks using this CUDA graphs trick tend not to generate CUDA graphs for every size, but instead prefer to linearly sample sizes and pad; we should make it easier to do this (perhaps with a padding tensor subclass). One cool result is a 6x performance improvement on cm3leon, a newly announced multi-modal model from Meta AI.

- New API: torch._dynamo.maybe_mark_dynamic. The new torch._dynamo.maybe_mark_dynamic lets you suggest that we should try compiling a tensor dynamically, but doesn’t raise an error if it gets specialized (unlike mark_dynamic).

- Infer valid input sizes from programs. Horace has wanted this for some time, and with Yukio’s recent Z3 translation validation work landed, it turned out to be pretty easy to write a PoC to exhaustively search the space of valid inputs, using guards to turn us away from portions of the space we’ve seen before. Check it out at dinfer.py · GitHub. If anyone is interested in productionizing this, it would be a neat little project to (1) put this code in PyTorch and put a nicer API on it (note that as written, you have to specify the input dimensions and dtypes of input tensors, so you’ll need to figure out a good way of specifying or inferring this info), (2) improve the solving code to minimize the generated sizes for an equivalence class, and (3) use it for something cool; e.g., you could use it to automatically generate sample inputs for OpInfo tests. Tag me (@ezyang) as reviewer if you send a PR!

- Enabling automatic_dynamic_shapes in fbcode, for real this time. It turns out that I failed to actually turn things on in fbcode last time, so we are actually doing it for real this time: Switch automatic_dynamic_shapes to True by default in fbcode. This got reverted once for breaking an internal model unit test (Incorrect ValueRanges analysis · Issue #105097 · pytorch/pytorch · GitHub, fixed by Perform value range analysis with rationals when possible by lezcano · Pull Request #105137 · pytorch/pytorch · GitHub; thanks @Lezcano for the speedy fix). At time of writing, the PR has not actually hit fbcode yet.

- lit_llama has finally landed in torchbench: Add lit-llama benchmarks (logits, autoregressive generation, lora fine tuning) by ezyang · Pull Request #1730 · pytorch/benchmark · GitHub. At time of writing, this model is in canary models because the weight download is a little flaky. This is the only 7B model in our benchmark suite and there’s a bit of pain associated with this; for example, we can’t run accuracy tests on this model, because accuracy tests are implemented by holding two copies of the model in memory, which we can’t do at 7B parameters.

- Notable bug fixes.

- Transmute refined SymInt into int makes it more likely you’ll get an int rather than a SymInt if the SymInt got specialized into a constant. This sometimes caused some bugs with downstream components that can handle SymInt but choke on int.

- Fix AttributeError(“‘constexpr’ object has no attribute ‘type’”); another fix for HF llama

- Coming soon: Immediately compile backwards graph in AOTAutograd if dynamic shapes will fix “guard ignored, could cause correctness problems” warning. It’s waiting on review right now.

- Notable new bugs.

- Inductor backend for CPU inference extremely slow - this bug actually seems to be fixed on main thanks to dynamic shapes. Moral of the story: if you want dynamic shapes, use a nightly! We have soooo many improvements.

- StableDiffusion with dynamic=True still recompiles
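The "linearly sample sizes and pad" pattern from the CUDA graphs item above can be sketched in a few lines of plain Python. This is only an illustration of the bucketing arithmetic: pad_to_bucket, count_recordings, the step of 32, and the 1..128 length range are all hypothetical names and values, not part of PyTorch or of the benchmark setup.

```python
def pad_to_bucket(length: int, step: int) -> int:
    """Round length up to the next multiple of step (the padded size)."""
    if length <= 0 or step <= 0:
        raise ValueError("length and step must be positive")
    return -(-length // step) * step  # ceiling division, then scale back up


def count_recordings(lengths, step: int) -> int:
    """Number of distinct padded sizes, i.e. graphs that would be recorded."""
    return len({pad_to_bucket(n, step) for n in lengths})


# Hypothetical decode loop that sees every sequence length from 1 to 128.
seq_lengths = range(1, 129)

exact = count_recordings(seq_lengths, step=1)      # one recording per exact size
bucketed = count_recordings(seq_lengths, step=32)  # one recording per 32-wide bucket

print(f"exact sizes: {exact} recordings, bucketed: {bucketed} recordings")
```

The trade-off is the usual one: fewer recordings (and less standing CUDA graph memory) in exchange for wasted compute on the padded elements.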

CI skips. -3, -1, -1, -2 (no change).
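The search loop described in the "Infer valid input sizes" item can be illustrated with a toy, pure-Python sketch. Everything here is hypothetical: toy_program stands in for tracing a real model and collecting its guards, and brute-force enumeration over a small bounded space replaces the Z3 solver used in the actual PoC (dinfer.py), whose code is not reproduced here.

```python
from itertools import product


def toy_program(n, m):
    """Hypothetical stand-in for tracing a model on input sizes (n, m).

    Returns (ok, guard): whether the sizes are valid, plus a predicate
    covering every input that would take the same path.
    """
    if n % 2 != 0:
        return False, lambda n, m: n % 2 != 0
    if m < n:
        return False, lambda n, m: n % 2 == 0 and m < n
    return True, lambda n, m: n % 2 == 0 and m >= n


def enumerate_classes(program, bound):
    """Find one representative per equivalence class within [1, bound]^2."""
    seen_guards = []
    classes = []
    for candidate in product(range(1, bound + 1), repeat=2):
        # Skip regions of the space already described by earlier guards.
        if any(guard(*candidate) for guard in seen_guards):
            continue
        ok, guard = program(*candidate)
        seen_guards.append(guard)
        classes.append((candidate, ok))
    return classes


classes = enumerate_classes(toy_program, bound=4)
for sizes, ok in classes:
    print(sizes, "valid" if ok else "invalid")
```

Because candidates are visited in ascending order, each representative here is the lexicographically smallest member of its class. A solver-based version would presumably need an explicit minimization objective to get the same property, which is roughly what to-do item (2) asks for.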

Training dashboard (as of 7b4d080496). This week on HUD

| Metric | Torchbench | Huggingface | TIMM models | Dynamic |
|---|---|---|---|---|
| Passrate | 89%, 57/64 → 92%, 59/64 | 98%, 45/46 → 96%, 44/46 | 97%, 58/60 → 98%, 59/60 | 88%, 7/8 → 100%, 8/8 |
| Speedup | 1.11x → 1.52x | 1.60x → 1.66x | 1.20x → 1.27x | 1.30x → 1.93x |
| Comptime | 97s → 86s | 124s → 120s | 178s → 142s | 40s → 42s |
| Memory | 0.80x | 0.97x | 1.00x → 1.01x | 0.73x → 0.69x |

- Now passing: hf_Longformer (this used to fail with ValueError: Cannot view a tensor with shape torch.Size([4, 12, 1024, 513]) and strides (6303744, 513, 6156, 1) as a tensor with shape (48, 4, 256, 513); this is fixed thanks to Brian Hirsh finally landing his AOTAutograd longformer fix), vision_maskrcnn (flaky), eca_botnext26ts_256 and mobilevit_s (used to timeout; maybe the speedup from CUDA graphs was enough to get them under the timeout again)

- Now failing: DebertaV2ForQuestionAnswering (failing accuracy due to cudagraphs, failing on inductor_with_cudagraphs too), cait_m36_384 (OOMing on accuracy due to increased CUDA graph memory usage)

- Speedups: The majority of our speedups are due to the enablement of CUDA graphs for dynamic shapes. Some notable models and their speedups: BERT_pytorch (1.7698 → 3.3071), hf_GPT2 (1.7728 → 2.0056), basic_gnn_gin (1.3151 → 2.4841). The improvements on HF and TIMM models are much more modest since these are not super overhead bound models. Note that these numbers are still behind inductor_with_cudagraphs, because we are still losing some optimizations from running the PT2 compiler stack without static shapes.

- Slowdowns: dlrm (infra failure due to cudagraphs, failing on inductor_with_cudagraphs too), hf_T5 (2.0252 → 1.8939, oddly enough; could this be due to memory pressure? But even more weirdly, hf_T5_large improved perf)

- Comptime/Memory: By and large, compilation time did not increase, but for our training setup this is expected, as we only actually run at one batch size, so you are simply measuring the cost of a single CUDA graph recording. As expected, memory compression ratio gets worse, due to standing allocation from CUDA graphs.

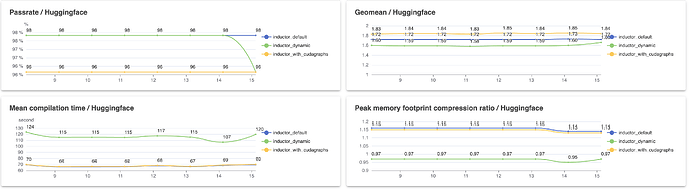

Inference dashboard (as of 7b4d080496). This week on HUD

| Metric | Torchbench | Huggingface | TIMM models | Dynamic |
|---|---|---|---|---|
| Passrate | 86%, 63/73 → 88%, 64/73 | 98%, 45/46 | 100%, 60/60 | 58%, 7/12 |
| Speedup | 1.52x → 1.50x | 1.64x → 1.76x | 1.73x → 1.62x | 1.96x → 2.94x |
| Comptime | 28s → 36s | 44s → 46s | 34s | 53s → 72s |
| Memory | 0.67x → 0.68x | 1.11x | 0.84x → 0.85x | 0.86x → 0.87x |

- Now passing: hf_Longformer (see training above)

- Speedups: torchbench numbers are actually a huge mixed bag. Here are some of the wins: BERT_pytorch (2.2317 → 2.4529), basic_gnn_edgecnn (1.7809 → 1.8732; note that for some reason many of the GNN variants are failing performance on inference, but not accuracy), cm3leon_generate (1.3037 → 5.7822, WOW! This is consistent with some perf analysis Armen and I did months ago, where I concluded that cm3leon was hella overhead bound), hf_T5_generate (2.2619 → 8.2081), hf_T5_large (3.1690 → 5.1747)

- Slowdowns: A lot more models did worse with CUDA graphs enabled, including LearningToPaint (1.9209 → 1.6812), resnet18 (1.7779 → 1.4028), shufflenet_v2_x1_0 (1.9882 → 1.6010), squeezenet1_1 (1.8625 → 1.0040), yolov3 (2.0997 → 1.8843). It’s not entirely clear what’s going on here, but we will note that there was a sizable dip in CUDA graphs performance without dynamic shapes too this week on torchbench. There is an across-the-board performance regression on TIMM models (and a slight regression on HuggingFace too).

- Comptime/Memory: Comptime generally got worse across the board, but not too much worse. Particularly notable are the generate models: hf_T5_generate (881 → 1032), cm3leon_generate (131 → 203). CUDA graphs is not free, but given that we’re running at much more than two sequence lengths, you can see the bulk of the compile cost is the PT2 stack. For the most part, memory usage stayed fairly stable, interestingly enough.

What’s next?

- I think I want to investigate the memory planning situation with CUDA graphs a bit more; I also think it’s a good time to teach Inductor how to deal with data-dependent ops (without having to graph break on them.)