Our next PyTorch Core Maintainer Meeting is scheduled for Aug 15th.

The general purpose of these meetings is to:

- Discuss any technical plans and roadmaps for PyTorch
- Review any high-level requests related to PyTorch, such as:
  - Creating new modules
  - Adding new maintainers at the core, module, or library level
  - Resolving any disputes among maintainers

PyTorch core maintainers are the primary attendees, with module maintainers and others invited only per meeting, depending on the agenda. The agenda for this meeting will be added to this post as it is developed and meeting minutes will be published to dev-discuss.pytorch.org.

PyTorch Core Maintainers are:

Soumith Chintala

Edward Yang

Gregory Chanan

Dmytro Dzhulgakov

Nikita Shulga

Piotr Bialecki

Alban Desmaison

If you have any questions about PyTorch technical governance, please refer to the information here:

Persons of Interest

Governance Overview

The agenda is being developed right now. I’ll follow up to this post with the agenda for visibility.

Any community member can propose agenda items by submitting them using the following link: Form

These minutes from the Aug 15th meeting were approved in the Dec 5th core maintainers meeting

Aug 15th meeting

Core Maintainers: Soumith Chintala, Gregory Chanan, Edward Yang, Dmytro Dzhulgakov, Alban Desmaison, Nikita Shulga, Piotr Bialecki

Facilitator: Chris Gottbrath

Discussion on the submission form

- Why not email?
- Alban offered that we could perhaps use a PyTorch-based Gmail account.
- Soumith: Just use a Google Form .. simple, move on.

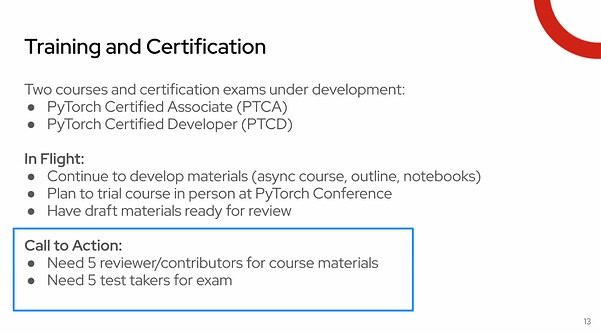

Reviewer of the certification

- Nikita: Should we endorse the idea of certification at all?
- Alban: There is a lot of demand .. so we could push back but that might be a bit of an uphill battle
- Chris: we could establish standards and make sure that the materials meet those standards
- Alban: I don’t want to write the certification book
- Piotr: Have seen the requests as well. I volunteered to make sure that the information in the certification isn’t wrong
- Greg: That makes sense. The thing I would want to be careful about is to try to make sure that the core maintainers aren’t endorsing it, though one or more of the maintainers might also endorse.
- Piotr: Review specifically around the GPU part
- Chris: I think the ask was more for experts … than the core maintainers specifically endorsing the
  - Core maintainer endorsement would be a separate thing
- Others: we don’t want to write the materials

Roadmaps posted publicly

Core maintainers for the projects that remain in the github pytorch org

- What do we want to do for the domain libraries?
- Alban: Should we host things that are critical packages for the offering?
- Nikita: We should have a concrete list before we can make a decision
- Alban: for items where there isn’t an organization that could take
- Greg: potentially conflating things .. process for what stays and what doesn’t
- Alban: I think it is a grey area .. and the foundation needs to know who to defer to
- Nikita: are we uniquely suited to refer and find folks who could maintain the code?
- Alban: There are infra implications ..
  - Meta PyTorch infra + a little bit of PTF infra team – are implicitly involved in resolving issues
- Nikita: getting back to the main question: we should respond to the torchtune maintainer question .. and then also who would answer the question in a few months if torchtune remains in the foundation
- Greg: I don’t think we should answer the question, at least now .. I don’t think we know what we [meta] want.
  - Because the ask behind the ask is about infra support

- Alban: I think that makes some sense
- The reason why folks are asking to stay .. is because they want access to infra
- The other reason that this is complicated is that some projects are associated so closely with PyTorch that if something went wrong it would damage the brand
- Nikita: For torchvision – yes, we probably should keep it
- Greg: Proposes

- Greg:
  - We want to be careful about what we do .. but the plan might be that we release it a few more times and then kill it.
- Alban:
  - We should decide if taking ownership of a project is on the table or not
- Nikita:
  - CPU info as an example – I am willing to be on the hook for CPU info but that is something I can do as an individual, not necessarily signing up the core maintainers to also care
- Alban:
  - There are dependency repos we are going to need to keep
- Nikita:
  - If there are dependencies, we need to figure out how to handle that, which might mean removing the dependency vs maintaining it in perpetuity
- Alban:
  - If we want to keep a specific package in core .. the thinking is that there is an opportunity to keep it (from the PTF perspective)
- Chris:
  - There can be more than one group of technical maintainers

Remove merge rights for metamates to prevent things like [REDACTED]

- Alban
- Greg
- Ideologically aligned
- Concerned about agreeing without understanding the full context
- Why aren’t we finding these through other processes?
- Are folks in this broader group making useful code mods?
- Nikita
- Alban
- Greg
  - Can we get a list of such examples .. doesn’t seem like an unreasonable ask
- Nikita
- Probably about 15 of them
- Bleeds into project health
- Could potentially catch this with a stronger culture of module ownership
- Greg
  - Don’t want to merge these things together – don’t want to boil the ocean
- Piotr
  - Asking for clarification on the scenario
- Alban
  - The challenge is that the merge rules have some gaps and these PRs don’t trigger required reviews
- Nikita
  - Is there a way to create a tool to flag the anomalous PRs?
- Summary

FYI: Wheel variants is happening: PyTorch 2.8 Live Release Q&A

- 10x smaller wheels AND better UX? Yes!

Nomination of [redacted]