State of PT2: Nov 3, 2023 edition

Previous update: State of symbolic shapes branch - #71 by ezyang

Sorry about the month’s delay! Between more vacation and PTC there wasn’t much time to do a writeup over the weekend.

Executive summary

Big tickets

- PyTorch Conference happened! Thanks to everyone who attended; there were lots of fun discussions. You can watch the talks at https://www.youtube.com/watch?v=dR0lHxt3Tjo&list=PL_lsbAsL_o2BivkGLiDfHY9VqWlaNoZ2O . Some fun in-person discussions that I had: (1) With Pierre Guilmin: you can now torch.compile complex tensors, see Add complex tensor with subclassing by pierreguilmin · Pull Request #48 · albanD/subclass_zoo · GitHub. This is actually going to be the preferred way to torch.compile complex numbers, as Triton doesn’t support interleaved layout and you’re not going to get efficient matrix multiply that way anyway (because the built-in instructions don’t support complex). (2) Ho Young Jhoo and Nuno Lopes had some interesting work on automatically pipelining NNs. (3) Jack Cao and I sketched out what single step graph should look like in Dynamo; track progress at [WIP] Dynamo single step graph by JackCaoG · Pull Request #112296 · pytorch/pytorch · GitHub

- PyTorch 2.2 release is coming! The branch cut will be Dec 1.
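To make the complex tensor point above concrete, here is a minimal sketch of a complex matrix multiply on a split layout, where the real and imaginary parts live in two separate real tensors. The function names are hypothetical and this is not the API from the subclass_zoo PR; it only illustrates why a split representation maps onto ordinary real matmuls.

```python
import torch


def split_complex_matmul(a_re, a_im, b_re, b_im):
    """Compute (a_re + i*a_im) @ (b_re + i*b_im) with four real matmuls.

    Hypothetical helper for illustration only.
    """
    out_re = a_re @ b_re - a_im @ b_im
    out_im = a_re @ b_im + a_im @ b_re
    return out_re, out_im


def check_against_builtin_complex():
    """Compare the split-layout result with PyTorch's native complex matmul."""
    torch.manual_seed(0)
    a = torch.randn(4, 3, dtype=torch.complex64)
    b = torch.randn(3, 5, dtype=torch.complex64)
    out_re, out_im = split_complex_matmul(a.real, a.imag, b.real, b.imag)
    return torch.allclose(torch.complex(out_re, out_im), a @ b, atol=1e-5)
```

A tensor subclass built along these lines would hold the two real tensors as its inner tensors, so the compiler only ever sees real-valued ops.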

Dynamo

- We’ve been having lots of discussions about what it will take to get Dynamo to the same level of stability as long running compiler projects like HHVM or LLVM. Some thoughts about refactoring pieces at Refactoring Dynamo for stability - Google Docs As a smaller step, @voz has organized a weekly triage meeting for PT2 issues, separate from the regular PT2 weekly.

- @suo is taking a serious look at getting torchbind to work on PT2. Some basic design notes at PT2 torchbind - Google Docs ; we also discussed this at composability sync

- In the land of tracing FSDP, there is currently some grunging about in PyTorch’s accumulate grad implementation. It is fairly complicated, including logic that checks the reference count to decide whether or not a buffer can be reused in place. There is some debate about whether there should be an accumulate grad aten op (@jansel implemented one that lowers all the way to inductor), or whether it should be traced through by Dynamo.

- Some work towards reducing the amount of guard administration is being done in [export] Skip guard propagation for export only. by zhxchen17 · Pull Request #112685 · pytorch/pytorch · GitHub, which should materially improve Dynamo tracing speeds.

- Some news about compiled optimizer from @mlazos: https://docs.google.com/document/d/1oHyw0RULF7UKZBCrOdVOzshlhiBFuTT7zKZd2xOGc3k/edit
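On the accumulate grad item above, a heavily simplified sketch of the buffer-reuse decision may help show what is being debated. The function name and the boolean flag are hypothetical: the flag stands in for the reference-count check, and the real implementation has many more cases (layout, sparsity, grad mode) that are deliberately left out here.

```python
import torch


def accumulate_grad_sketch(existing_grad, new_grad, new_grad_is_unshared):
    """Hypothetical simplification of gradient accumulation.

    new_grad_is_unshared is an assumption standing in for the reference-count
    check: True means nobody else holds a reference to new_grad's buffer.
    """
    if existing_grad is None:
        if new_grad_is_unshared:
            # Safe to adopt the incoming buffer directly, no copy needed.
            return new_grad
        # Someone else may still read or write new_grad, so take a copy.
        return new_grad.clone()
    # A gradient already exists: accumulate into it in place.
    existing_grad.add_(new_grad)
    return existing_grad
```

The first branch depends on who else holds a reference to the incoming buffer, not on tensor values or shapes, which is the part of the logic the item above calls out as complicated.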

Core libraries

- Ying Liu has been working on a tensor subclass for async execution. We discussed it in composability sync. The idea is that you can trigger an operation (typically communication) on a side stream, as well as some follow-on operations, without having to literally move the follow-on operations to the point where a sync happens. This also means that code in torchrec that has to be manually written as a pair of custom autograd functions for req/wait can be written in an intuitive, autograd style. We have a version that does this manually with callbacks (only queue kernels onto the stream at some known later point in time) and Ying is working on another version that uses streams only. One interesting thing we noticed is that when you schedule allreduce first in forwards, backwards will naturally schedule it last, but you actually want the allreduce to happen ASAP! @albanD suggested we may be able to add an API to modify the priority order of autograd backwards, which could be useful.

- There will be a new repo https://github.com/pytorch-labs/ao for some of the new quantization schemes we’re working on. We discussed this in composability sync.

- I did a long overdue update to record_stream docs at Add a note about performant record_stream use. by ezyang · Pull Request #112526 · pytorch/pytorch · GitHub after having some more discussions about it with @eellison who was trying to get cuda graph trees to work with record stream.

- We’ve been talking about this with Vincent for a while, but there is now a proposed PR to add TensorDict to PyTorch core, check it out: [RFC] Tensordict integration by vmoens · Pull Request #112441 · pytorch/pytorch · GitHub

Dynamic shapes

- repeat_interleave dynamic shapes support was reverted due to S376879; this revert may itself have caused a SEV, S377088. It turns out that this diff was not related to the SEV, so we are relanding it.

- torchrec dlrm with sharding and inductor works end-to-end, all the way through! Many of the changes have been merged upstream to torchrec/FBGEMM. Check https://docs.google.com/document/d/1VTGEh0MqadAsuRy0s5u39wQhNwMSVgCgYewivMcBbuU/edit#bookmark=id.bpg458y8bfaa for status. We’re far enough along that the internal folks are going to try to do some enablement on their reco models

- We had some discussion about supporting mark_dynamic and automatic dynamic on tensor subclasses. Some of the complication is around the fact that you can have sizes that only occur in the outer tensor but not the inner tensor, and vice versa. See the notes at: https://docs.google.com/document/d/1ipSxcTzEMMOAPvxP-YJlD5JBZZmIGgh8Q34ixtOUCRo/edit#heading=h.3px8g3br0skz

- Adnan has been running into a lot of “cannot guard on data dependent SymInts” and this has me wondering if we shouldn’t have a mode that automatically suggests what runtime asserts you ought to have. This led to Tracing mode for unbacked SymInts using real data · Issue #112749 · pytorch/pytorch · GitHub

- If you need to force an input integer to be dynamic using mark_dynamic, one way to hack it is to pass a 0xN tensor instead of an int and then project out the int again with size(1).

- Notable new bugs

- AllenaiLongformerBase failing w/ dynamic shapes: “‘Pointwise’ object has no attribute ‘get_stride’”

- [dynamo] .view([..., -1, ...]) fails on Tensors with unbacked SymInts in the shape - the workaround we found was to manually replace i0 with i1 * 12 so that the modulus checks work out

- pack_padded_sequence/pad_packed_sequence support in dynamo

- Operators that return dynamic-shape outputs that require_grad choke in AOTAutograd

- torch2.1.0 DDP+compile+dynamic_shape cause error - workaround with optimize_ddp = False

- [inductor][dynamic] fused_attention pattern could not be matched due to sym_size

- Notable fixes

- Guarantee expr is a sympy.Expr before xreplace’ing it

- Reland “Trigger specialization when you call size()/stride() from C++ (#111935)”

- Refine replacements with equality tests on runtime asserts

- Allow binary pointwise operations to cause refinement on unbacked SymInts

- Use OpOverload instead of OpOverloadPacket for size/stride/etc slots

- Convert evaluate_expr GuardOnDataDependentSymNode into graph break

- Don’t DCE unbacked SymInt if it is returned as shape constant buffer

- SymIntify convolution

- Don’t suppress original error message for data-dependent value

- Allow SymInt to specialize to FLOAT

- Force specialization on INT_LIST

- Don’t sympify reflection_pad2d ranges and Improve reflection_pad2d lowering for dynamic shapes

- Use torch._check for cat error checking

- Fix arange with dynamic end argument.

Numbers

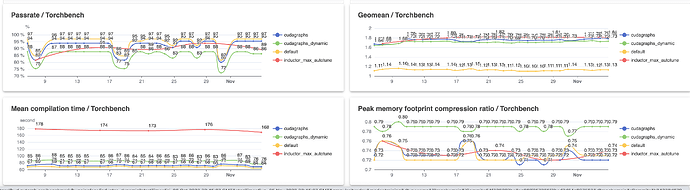

Training. 64f326097b dashboard

- TIMM improvement is from channels last optimization

Inference. 64f326097b dashboard

- 3% HF improvement from concat codegen on inference